By Praveen Asthana

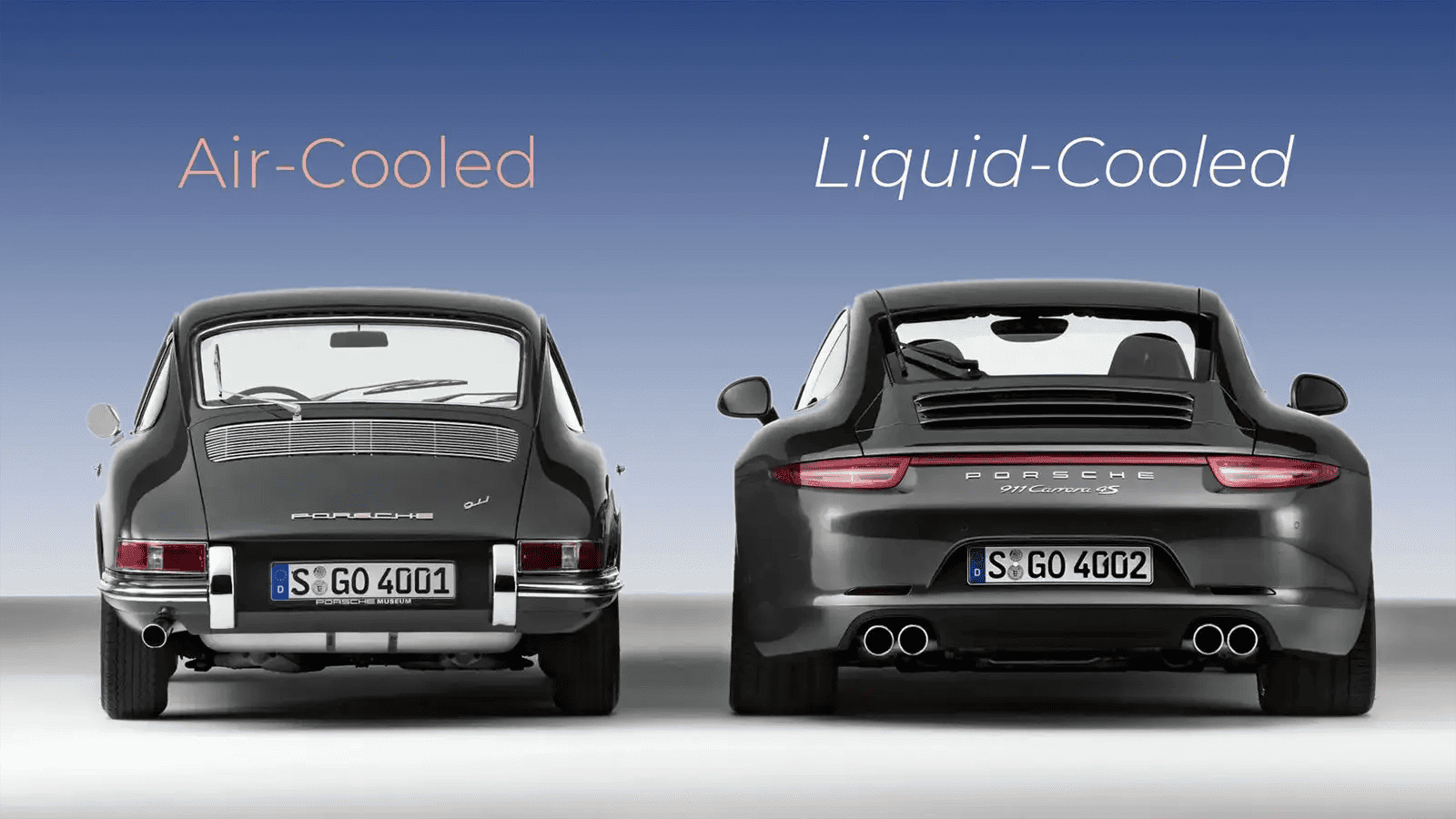

In 1998, Porsche, which had long since perfected the air-cooled high-performance engine, switched from air-cooled to liquid-cooled models. This was a huge move and, as expected, Porsche fans were outraged. But Porsche had not done this lightly. Many engineers had wanted to continue innovating with the air-cooled engine, which had, after all, propelled Porsche to a number of wins at the grueling Le Mans race. Other engineers recognized that future wins at Le Mans would be increasingly difficult with air-cooled technology and pushed for the change to liquid-cooled engines. “If we don’t do this, we won’t win Le Mans again. We will be left in the dust from other cars,” was the sentiment.

Another factor in the decision, and possibly the most important one for Porsche’s executives, was the increasing pressure from tightening environmental regulations. New emissions and fuel economy rules would be almost impossible to meet with air-cooled engines, so Porsche would have to switch to liquid-cooled engines.

In an interesting parallel, data center operators and IT leaders are facing a similar decision: whether to stay with chilled-air cooling or switch to liquid cooling. On the surface it appears easy to hang on to air cooling, but switching to liquid cooling opens up new possibilities for competitive advantage.

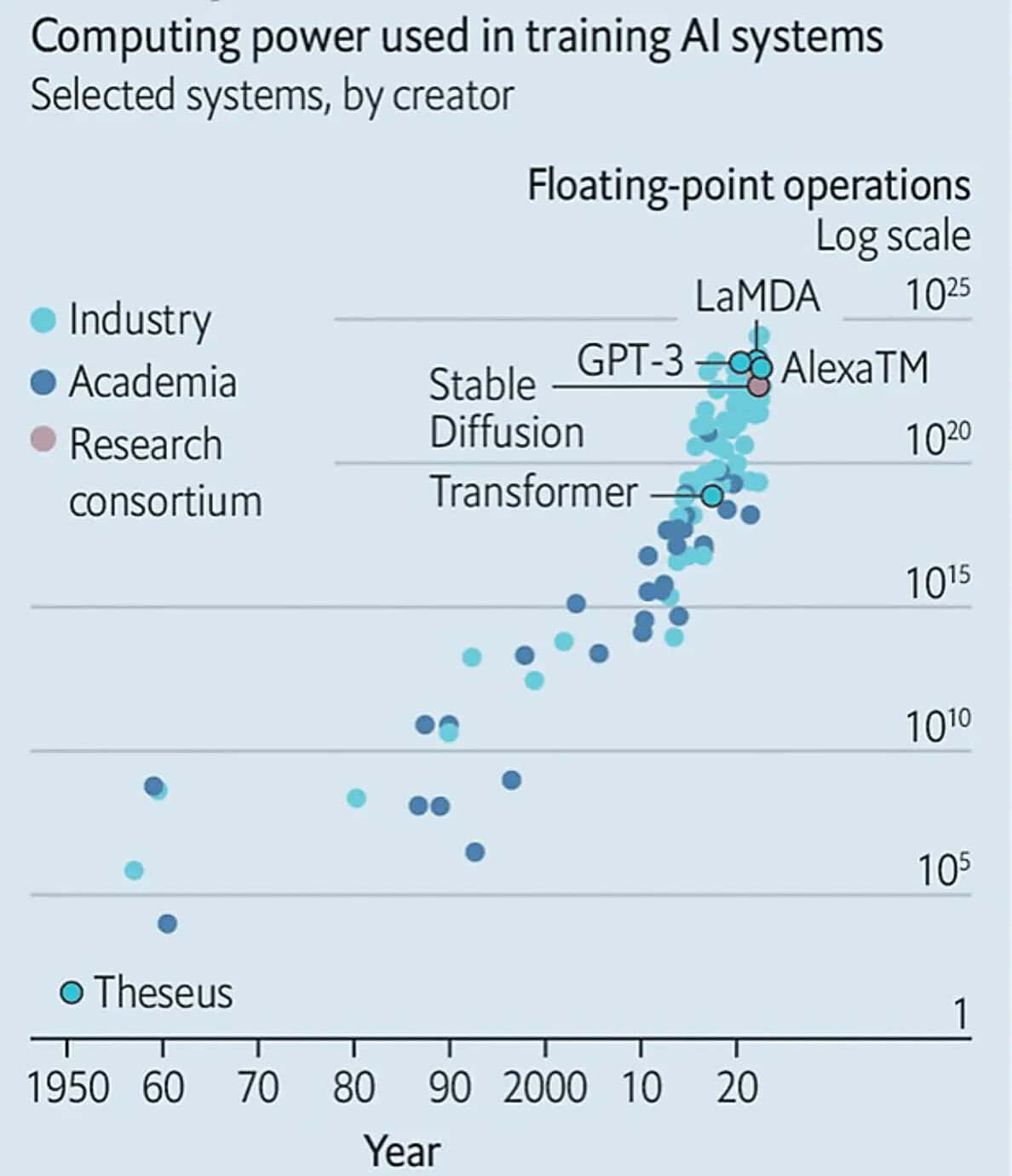

One of the most exciting of these possibilities is implementing AI applications, in particular Generative AI (GenAI), which recently exploded onto the IT scene with the advent of ChatGPT and its derivatives. Enterprise applications using GenAI are rapidly being developed in all fields. Indeed, 61% of CIOs polled by Salesforce.com expect to implement GenAI-based applications in their enterprises. Eventually, any enterprise that expects to have a competitive advantage will implement AI in its daily operations. This will result in a transformative shift in IT and data centers. Jensen Huang, CEO of NVIDIA, a leading provider of computational systems for GenAI, recently stated: “A trillion dollars of installed global data center infrastructure will transition to accelerated computing as companies race to apply generative AI into every service or business process.” And, according to The Economist, Dell’Oro Group predicts that the world’s data centers will increase the share of servers dedicated to AI from less than 10% today to about 20% within five years, resulting in about 45% of capital spending being directed towards AI systems.

But GenAI is extraordinarily computationally intensive, for both training and inference. And as large language models (LLMs) become larger and more complex, the need for computation (measured in floating-point operations per second) is growing exponentially:

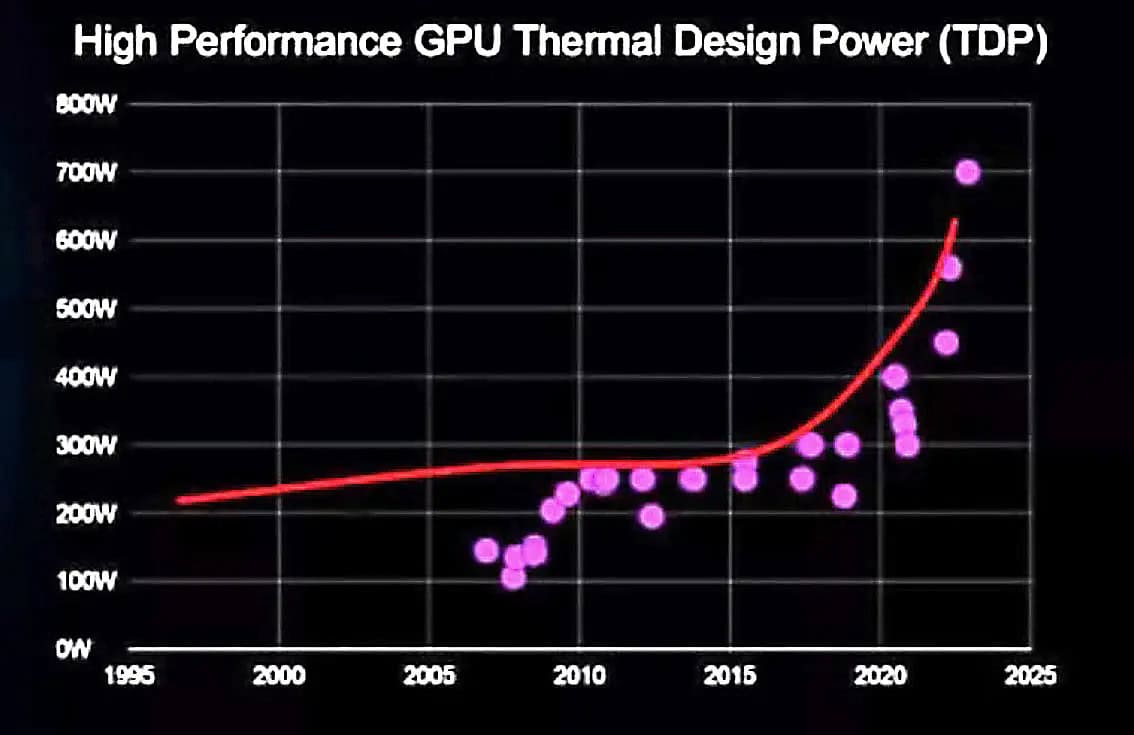

This need for ever-increasing teraflop-scale computing is directly driving higher-performance (and thus hotter) GPUs and CPUs. We are rapidly heading towards 1,000 W GPUs and beyond.

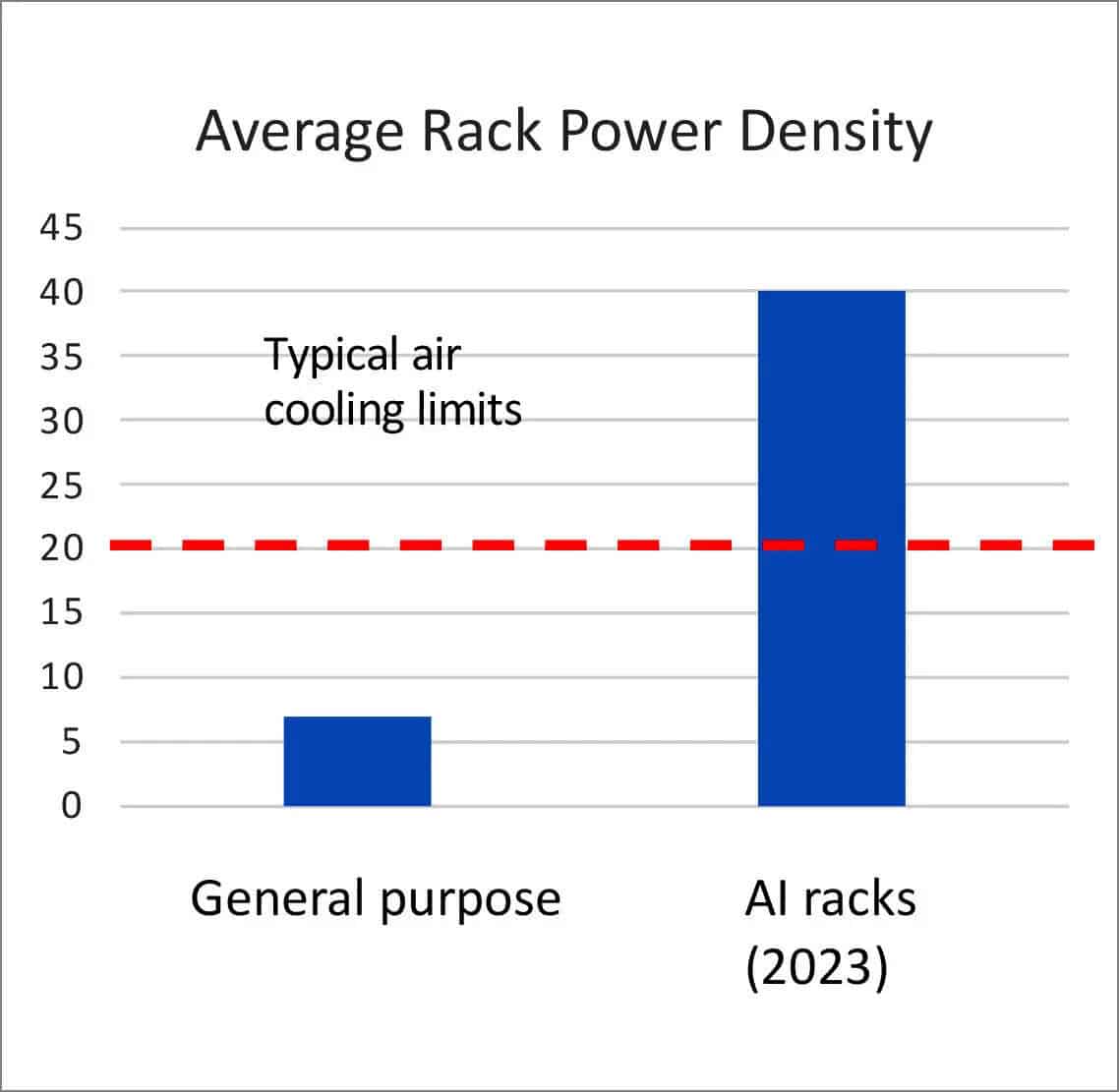

Cooling a full rack of servers containing high-power GPUs is extremely challenging with chilled-air cooling methods. Typical data center racks have a power density of less than 10 kW, but racks aimed specifically at AI workloads are heading for 40-50 kW, which is beyond the capabilities of normal air cooling.
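To make the rack-density gap concrete, the short Python sketch below estimates rack power from a server count and per-GPU wattage. The number of servers per rack, the GPUs per server, and the non-GPU power per server are illustrative assumptions, not figures from any vendor. Only the 1,000 W GPU and the 10 kW typical-rack figure come from the discussion above.

```python
def rack_power_kw(servers_per_rack: int,
                  gpus_per_server: int,
                  gpu_watts: float,
                  other_watts_per_server: float) -> float:
    """Estimate total rack power in kW from per-server components."""
    watts_per_server = gpus_per_server * gpu_watts + other_watts_per_server
    return servers_per_rack * watts_per_server / 1000.0


TYPICAL_RACK_KW = 10.0  # upper bound for a typical rack, per the article

# Hypothetical AI rack: 4 servers, each with 8 GPUs at 1,000 W,
# plus an assumed 2,000 W per server for CPUs, memory, fans, and so on.
rack_kw = rack_power_kw(servers_per_rack=4,
                        gpus_per_server=8,
                        gpu_watts=1000.0,
                        other_watts_per_server=2000.0)

print(f"Estimated AI rack power: {rack_kw:.0f} kW")
print(f"Multiple of a typical rack: {rack_kw / TYPICAL_RACK_KW:.1f}x")
```

Under these assumptions, just four GPU servers already reach the lower end of the 40-50 kW range, several times what a typical air-cooled rack is built for.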

Of course, you could try to push the limits of air cooling by investing in extremely large HVAC units and larger wet towers and chillers, but that comes at an environmental cost. Air cooling is so inefficient that around 50% of the power used in data centers is spent on cooling. As a result, air-cooled AI implementations use significant amounts of energy and water (which is needed for the chillers). Indeed, the water footprint of AI is raising alarm bells. It is estimated that training GPT-3, even in state-of-the-art data centers, consumes over 700,000 liters of water. Even a simple conversation with ChatGPT can use up 500 ml of water, which adds up when you have hundreds of millions of users. This will only increase as large language models (LLMs) become bigger and more computationally intensive. So, just as Porsche had to switch to liquid cooling in part because of environmental protection regulations, the same pressure will apply to data centers planning to implement AI.

For the kinds of power densities required by AI, it is imperative to switch to liquid cooling, which has been shown to be several thousand times more effective than air at cooling hot spots, and is thus significantly more environmentally friendly. Indeed, direct-to-chip liquid cooling solutions as delivered by Accelsius are extremely efficient, with less than 5% of the power used in systems going towards cooling and with zero water footprint (when dry towers are used as part of the system).
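As a rough illustration of what those cooling fractions mean, the sketch below compares the total power needed to run the same IT load when about 50% versus about 5% of total power goes to cooling. It assumes that cooling is the only overhead and that both percentages are fractions of total power. That is one reading of the figures quoted above, not a measured result, and the 40 kW rack load is simply the lower end of the AI rack range mentioned earlier.

```python
def facility_power_kw(it_load_kw: float, cooling_fraction: float) -> float:
    """Total power when `cooling_fraction` of the total goes to cooling.

    Assumes cooling is the only overhead, so:
        total = it_load / (1 - cooling_fraction)
    """
    if not 0.0 <= cooling_fraction < 1.0:
        raise ValueError("cooling_fraction must be in [0, 1)")
    return it_load_kw / (1.0 - cooling_fraction)


RACK_IT_LOAD_KW = 40.0  # assumed IT load of one AI rack

air_total = facility_power_kw(RACK_IT_LOAD_KW, 0.50)     # ~50% on cooling
liquid_total = facility_power_kw(RACK_IT_LOAD_KW, 0.05)  # ~5% on cooling

print(f"Air cooled:    {air_total:.1f} kW total")
print(f"Liquid cooled: {liquid_total:.1f} kW total")
print(f"Power saved:   {air_total - liquid_total:.1f} kW per rack")
```

Under these assumptions, the same 40 kW of compute draws roughly 80 kW in total with air cooling but only about 42 kW with liquid cooling.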

Companies that use liquid cooling to cool their AI implementations will have the efficiency and headroom to outpace their competitors. Others will be left in their dust.

Additional reading:

Compute and Energy Consumption Trends in Deep Learning Inference

https://arxiv.org/pdf/2109.05472.pdf